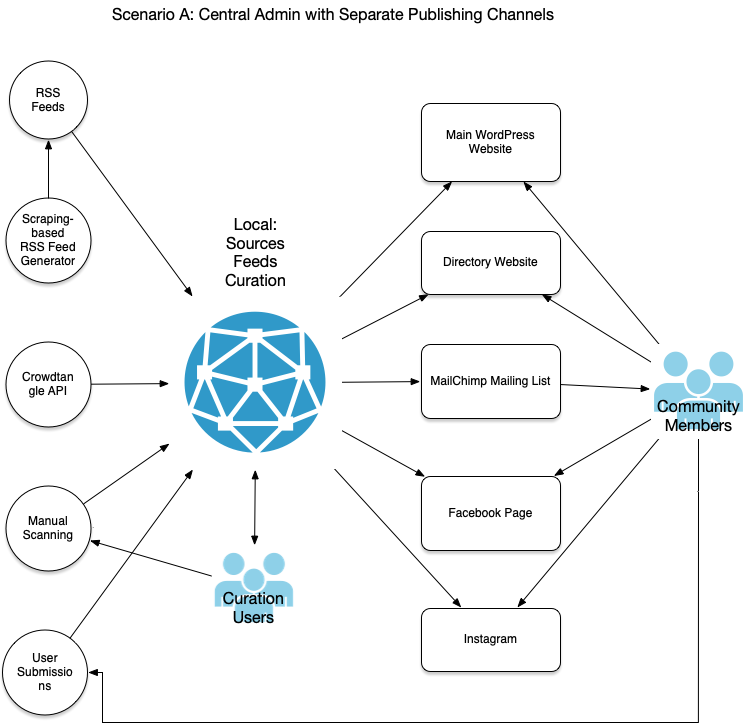

While working on the software code I’ve been keeping a list of changes over time, using the software tracking tool git. I thought I’d share that “changelog” here for posterity, as I won’t include it in the initial “release” of the software package for public consumption.

These entries may not be entirely clear as they’re usually shorthand summaries of the work that’s just been done. Sometimes they represent just a line or two of code changes, and sometimes they represent many new functions and features coming in all at once. But here they are (in reverse chronological order as of today) in case they’re useful:

2022-04-09

- Add but don’t use a welcome widget, need to work out width issues

- News item trend chart

- Latest news dashboard widget and starred feed activity count

- Remove default account widget

- Initial setup for dashboard stat widgets

- Reorder sidebar for daily workflow

- Enable dark mode and collapsible sidebar on desktop

- Add Filament config to our package’s published configs for simplicity

- Don’t show activity section in create feed form

- Disable manual creation of news items

- Specify feed check freq when importing, better docs for import/export

- Default to only last 7 days of news, show more records per page

- Make news items globally searchable with useful result formatting

- Create artisan command, job and schedule for checking bulk feeds

- Make it look better on smaller devices

- Align image center within container

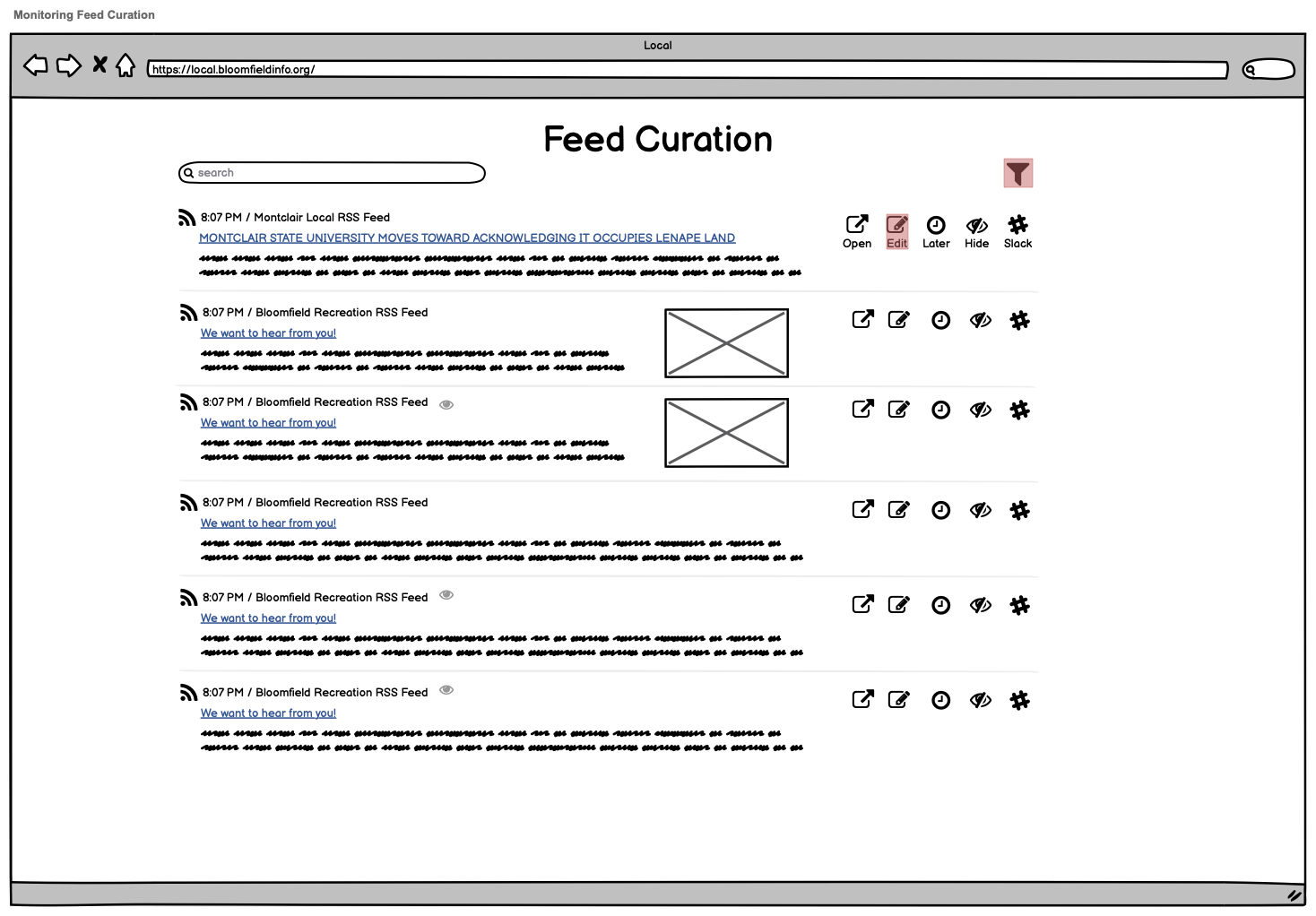

- WIP: news item media display in curation view

- Support different title generation methods, move fetch history timeframe to config

- Allow for apparently ridiculously long article URLs

- Include parent methods in configuring and booted so Filament can load Livewire

2022-04-08

- Refactor base class structure for better reusability of last check/update functions

- Docs

- Docs and clarifications

2022-04-07

- Icon update for FB items until we can get a real one in there

- Include feed names in curation view

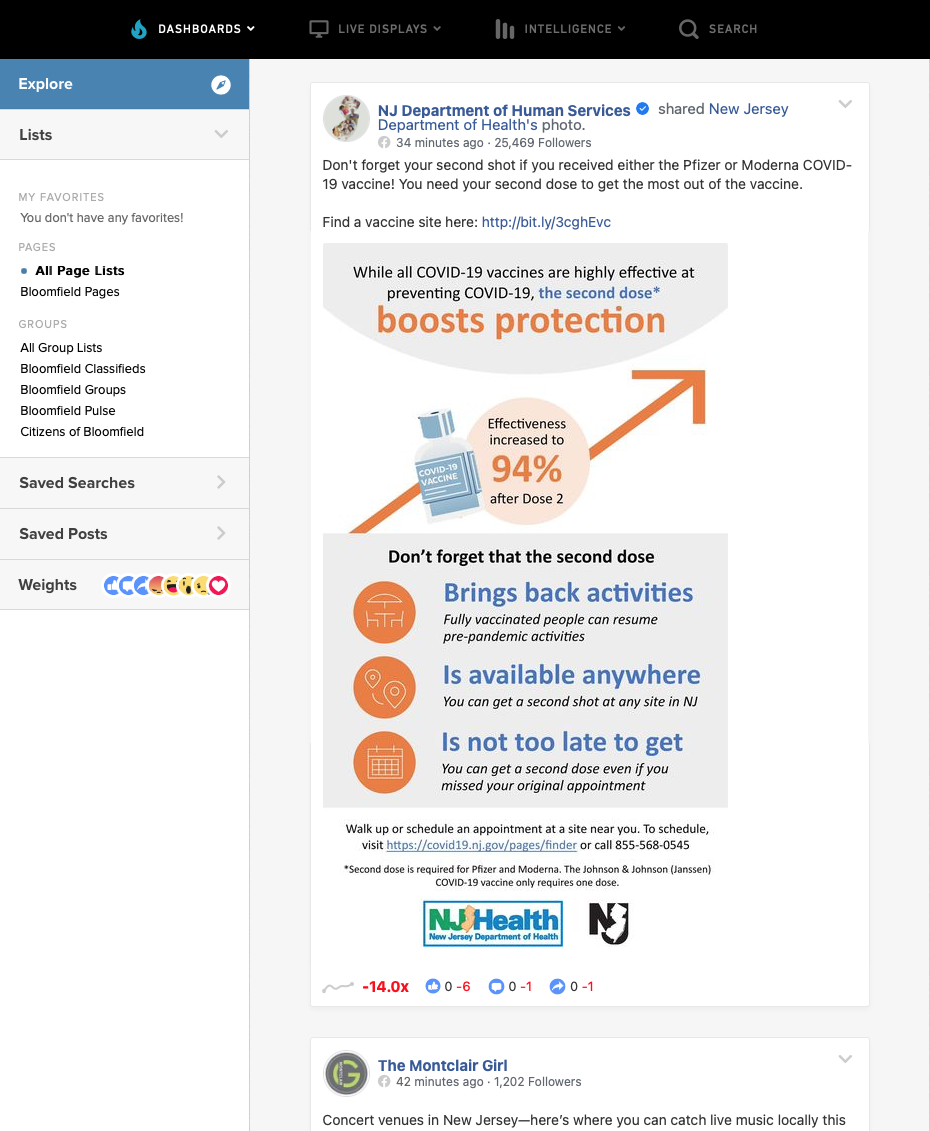

- Basic FB post fetching is working

- WIP: adding bulk feed handling

2022-04-06

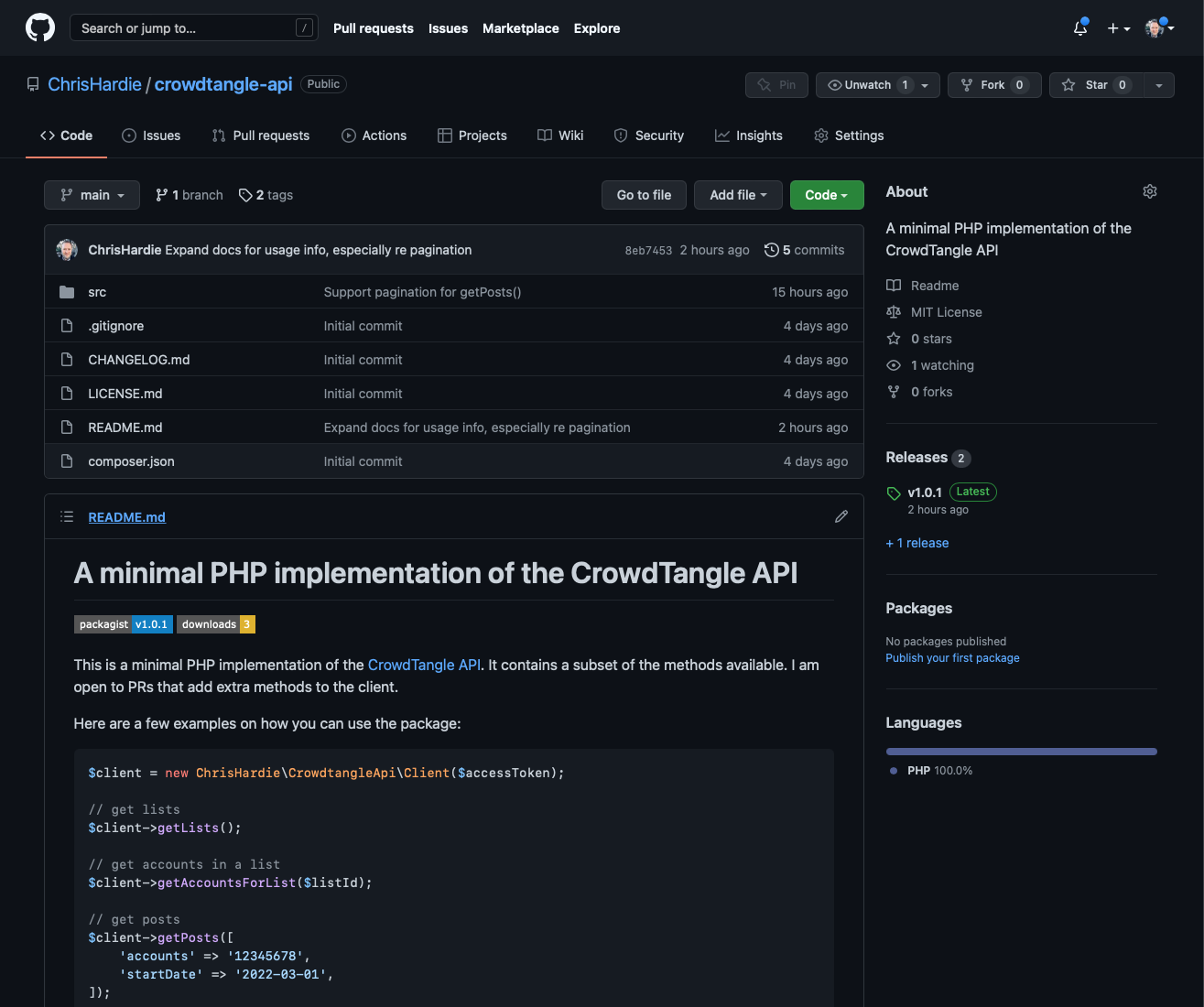

- Start adding some better documentation in README and config

- Set up package command scheduling

- Add console command to refresh Crowdtangle feeds

2022-04-04

- Action to refresh/create feeds from Facebook Pages and Groups

- Basic setup for Crowdtangle API access

2022-04-03

- Basic news item index tests

- Add livewire test to check Source creation through Filament

- Ridiculous scaffolding to support authenticated users when testing Filament views

- Load Filament and base laravel migrations so we can test Filament

- Maybe help testbench with package discovery

- Add example app key to phpunit for local testing

- Include Livewire service provider so tests will pass

- Included deleted feeds and sources for purposes of relationship fetch

- Visually indicate that feeds are failing in the table

- Have content curation record URLs go to the article in a new window

- Expand length of media_url field to accomodate ridiculous image URLs

- Add feed export command

- Feed importing is working

- Initial setup for feed importing